AI Conversations Beyond GPT: Mistral-7B's Game-Changing Leap in Large Language Models

12 November, 2023

Large language models have revolutionized artificial intelligence in recent years. One such model, Mistral-7B, stands out for its abilities.

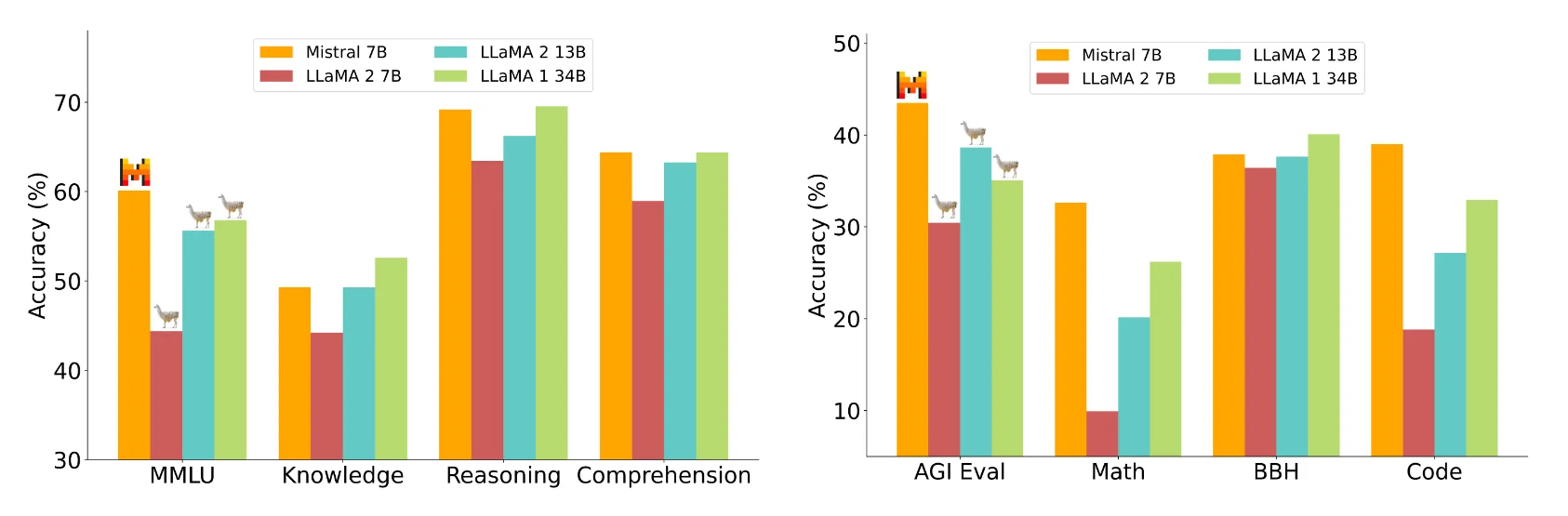

With just 7 billion parameters, Mistral-7B delivers top-tier performance at a lower cost than models with more parameters. It outperforms other 7B models and competes well against 13B chatbots.

This article explores Mistral-7B's features and how it can help. We'll look at:

How it works: Mistral-7B is a transformer-based large language model that can generate text, summarize documents, and write code.

Benchmarks: Test results show it outperforms other 7B models and rivals 13B models for conversations.

Use cases: Tasks like summarization, classification, autocompletion, and more.

A hands-on guide: We provide code for using Mistral-7B and customizing it for your needs.

Mistral-7B is a powerful and affordable AI tool. Let's learn how its abilities can benefit you.

The Powerful Potential of Mistral-7B

Artificial intelligence has advanced rapidly, especially in large language models (LLMs). Mistral-7B stands out for offering strong capabilities at a lower cost than larger models.

A High-Performing Solution

With just 7 billion parameters, Mistral-7B outperforms other 7B models and competes well against 13B chatbots. It delivers top-tier results while using fewer resources.
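To put "fewer resources" into rough numbers, here is a back-of-the-envelope sketch of how much memory the weights alone would need. It assumes round parameter counts (7 billion and 13 billion) and ignores activations, the KV cache, and framework overhead, so treat the figures as lower bounds rather than measurements. The helper name `weight_memory_gb` is made up for this illustration.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Round parameter counts are an assumption; real checkpoints differ slightly.
for name, params in [("7B model", 7e9), ("13B model", 13e9)]:
    fp16 = weight_memory_gb(params, 16)  # half-precision weights
    int4 = weight_memory_gb(params, 4)   # 4-bit quantized weights
    print(f"{name}: ~{fp16:.1f} GB in 16-bit, ~{int4:.1f} GB in 4-bit")
```

Under these assumptions a 7B model needs roughly half the weight memory of a 13B model at the same precision, which is why it fits more comfortably on a single consumer or Colab GPU.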

Comparing Mistral to Llama

Mistral 7B and Llama 2 are both open-weight, decoder-only transformer language models, but they have some key differences. Mistral 7B comes from Mistral AI and is released under the Apache 2.0 license, while Llama 2 comes from Meta, ships in 7B, 13B, and 70B sizes, and uses Meta's own community license. According to Mistral AI's published benchmarks, Mistral 7B outperforms Llama 2 13B across the evaluated tasks despite having roughly half as many parameters.

Part of that efficiency comes from the architecture. Mistral 7B uses grouped-query attention to speed up inference and sliding window attention to handle longer sequences at lower cost, whereas the 7B and 13B Llama 2 models use standard multi-head attention. So in summary, the larger Llama 2 models offer more raw capacity when hardware is plentiful, while Mistral 7B is easier to run on modest hardware and still holds its own against models nearly twice its size.

This guide examines Mistral-7B's code setup, features, use cases, and how to customize it.

Getting Started with Mistral-7B

Learn the basics of LLMs and Mistral-7B's architecture. Then explore tasks like summarization, classification, and autocompletion.

Running Mistral 7B with Python

Follow these steps to run Mistral-7B's powerful AI:

1. Install ctransformers: This library provides Python bindings for quantized transformer models and can load them directly from the Hugging Face Hub.

2. Initialize the Model: Load Mistral-7B from Hugging Face and set parameters for Colab's GPU.

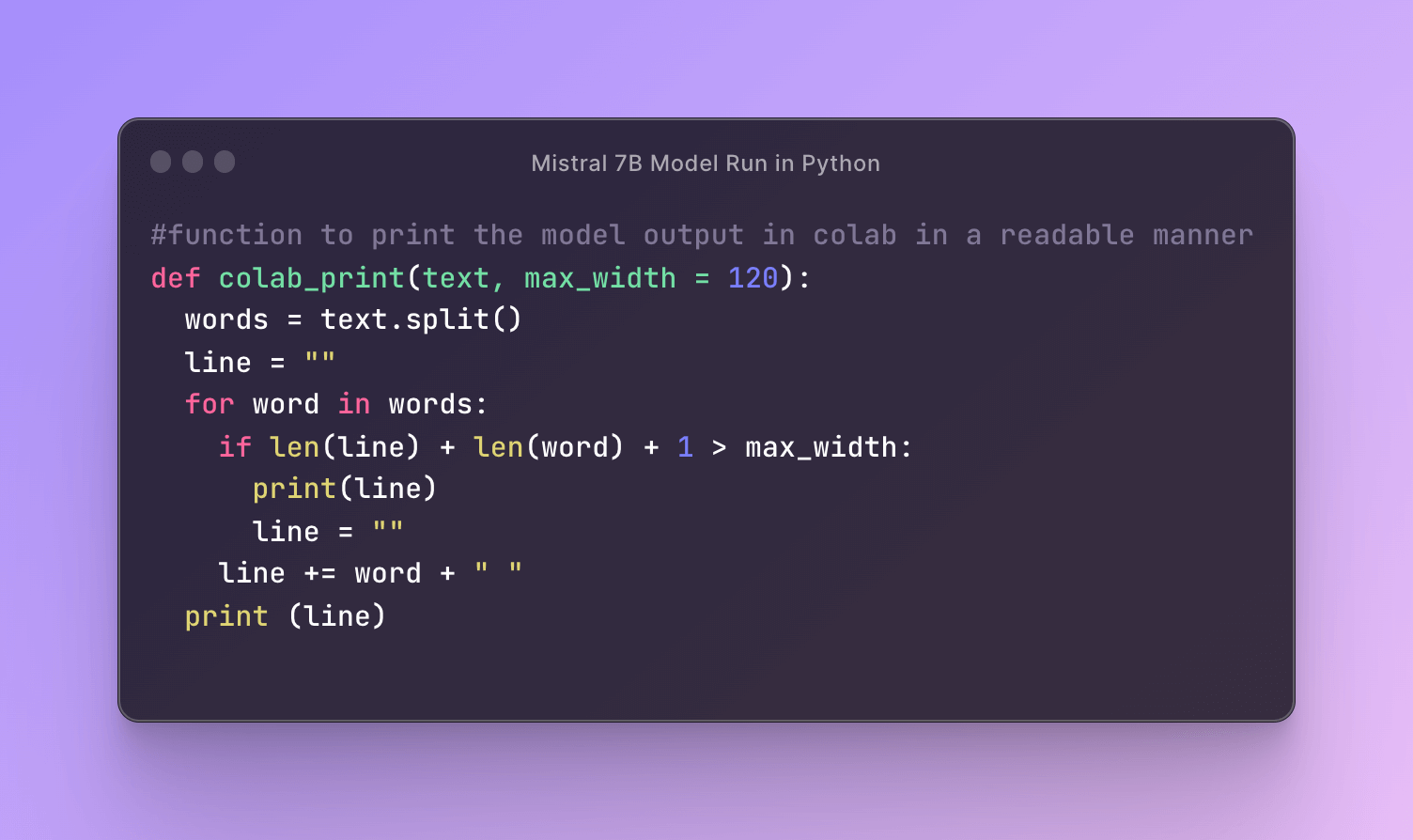

3. Format Output Function: Define a function to neatly display generation results.

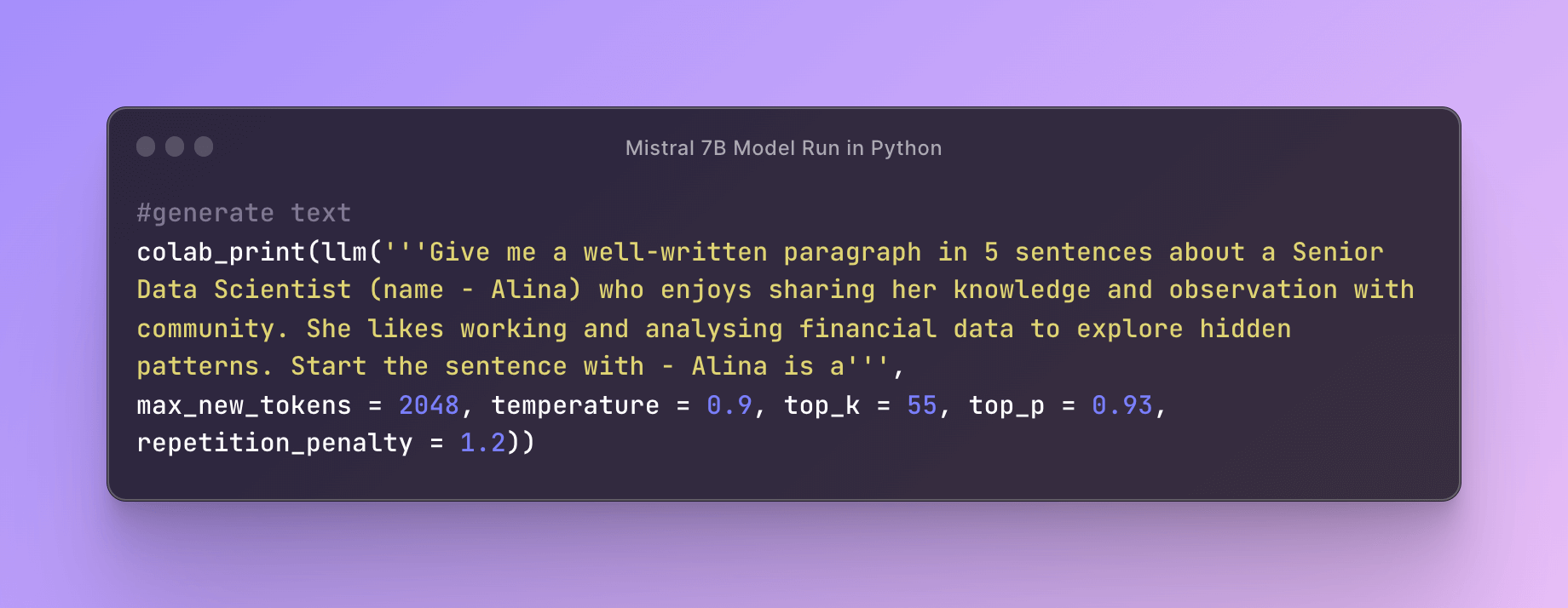

4. Generate Text: Use the model to autocomplete prompts. Tweak parameters to customize output.

Model Response: Alina is a senior data scientist who enjoys sharing her knowledge and observations with the community. She has worked in industry for over 10 years analyzing trends in the market. Alina likes using machine learning algorithms to discover patterns in the large financial datasets. In her spare time she writes a blog explaining her findings and techniques in a way that is easy to understand. Through her work and writing, Alina aims to educate others about the insights that can be gained from financial data.
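The four steps above can be sketched roughly as follows. This is a minimal sketch, not the article's original code: the repository and file names (`TheBloke/Mistral-7B-Instruct-v0.1-GGUF`, `mistral-7b-instruct-v0.1.Q4_K_M.gguf`), the `gpu_layers` value, and the sampling settings are all assumptions, and the helper names `load_model`, `format_output`, and `generate` are made up for illustration. It assumes ctransformers was installed first, for example with `pip install ctransformers[cuda]`.

```python
import textwrap

# Assumed model source: swap in whichever GGUF build of Mistral-7B you use.
MODEL_REPO = "TheBloke/Mistral-7B-Instruct-v0.1-GGUF"
MODEL_FILE = "mistral-7b-instruct-v0.1.Q4_K_M.gguf"


def load_model(gpu_layers: int = 50):
    """Step 2: load the quantized model (downloads the file on first use)."""
    # Imported lazily so the helpers below work without ctransformers installed.
    from ctransformers import AutoModelForCausalLM

    return AutoModelForCausalLM.from_pretrained(
        MODEL_REPO,
        model_file=MODEL_FILE,
        model_type="mistral",
        gpu_layers=gpu_layers,  # layers offloaded to the GPU; 0 means CPU only
    )


def format_output(text: str, width: int = 80) -> str:
    """Step 3: collapse stray whitespace and wrap the text for display."""
    wrapped = textwrap.fill(" ".join(text.split()), width=width)
    return f"Model Response:\n{wrapped}"


def generate(llm, prompt: str, **params) -> str:
    """Step 4: autocomplete a prompt; keyword arguments override the defaults."""
    settings = {
        "max_new_tokens": 256,
        "temperature": 0.7,
        "top_p": 0.95,
        "repetition_penalty": 1.1,
    }
    settings.update(params)
    return format_output(llm(prompt, **settings))


# Example usage (needs a GPU runtime and a model download):
# llm = load_model()
# print(generate(llm, "Alina is a senior data scientist who", temperature=0.5))
```

Lower `temperature` values make the completion more predictable, while a larger `max_new_tokens` allows longer output.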

Mistral-7B Use Cases

Here are some key use cases for Mistral-7B:

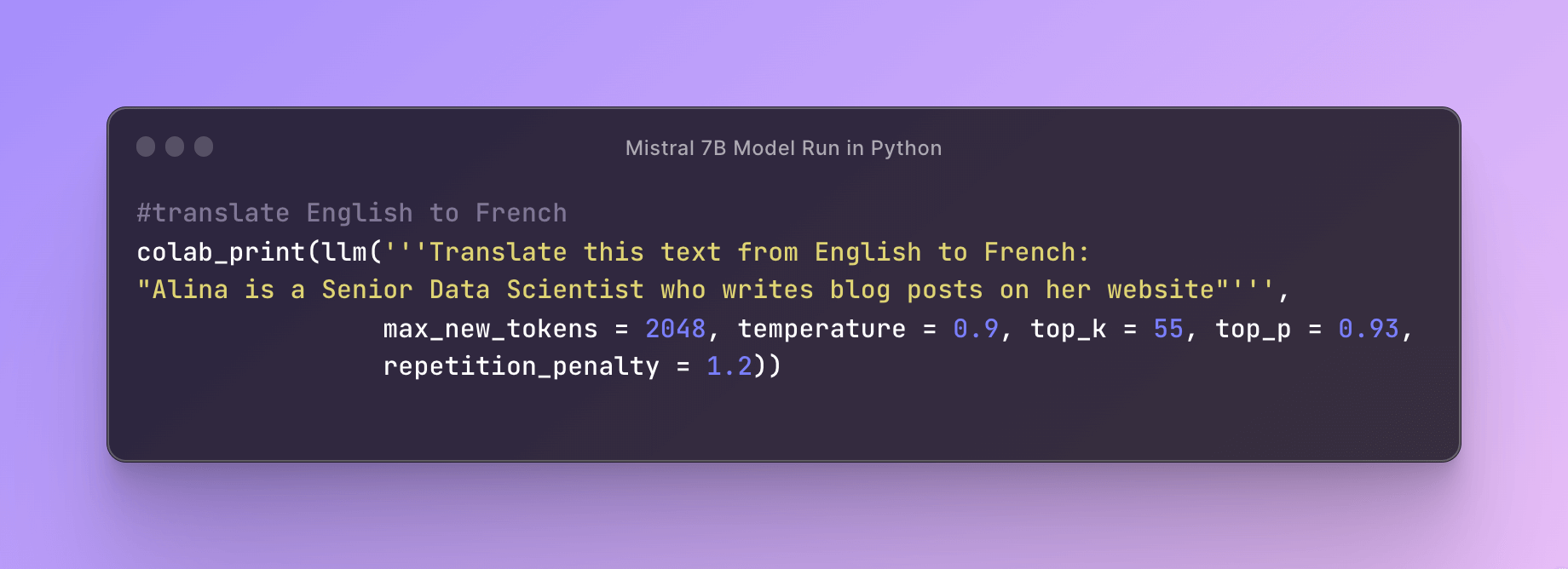

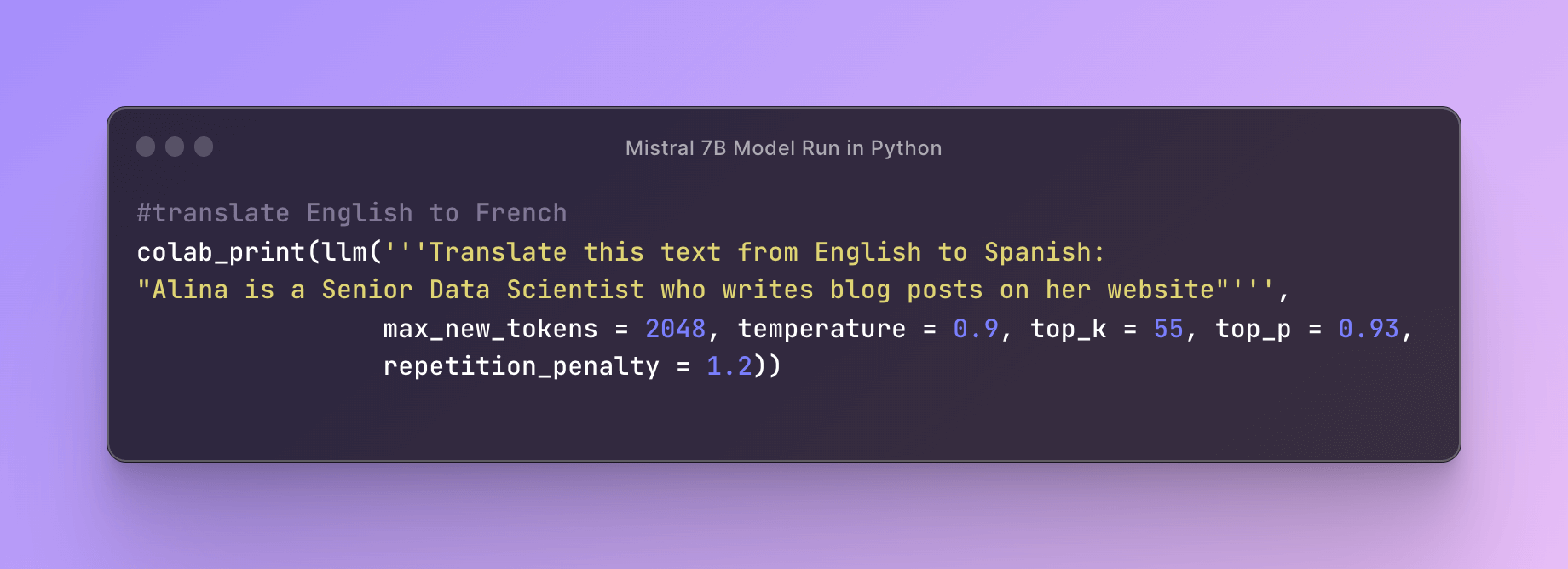

✦ Translation: Mistral-7B supports multi-language translation. Test its accuracy by translating between:

English and French

Model Response: Alina est une scientifique de données senior qui rédige des billets de blog sur son site web.

English and Spanish

Model Response: Alina es una científica de datos senior que escribe publicaciones de blog en su.

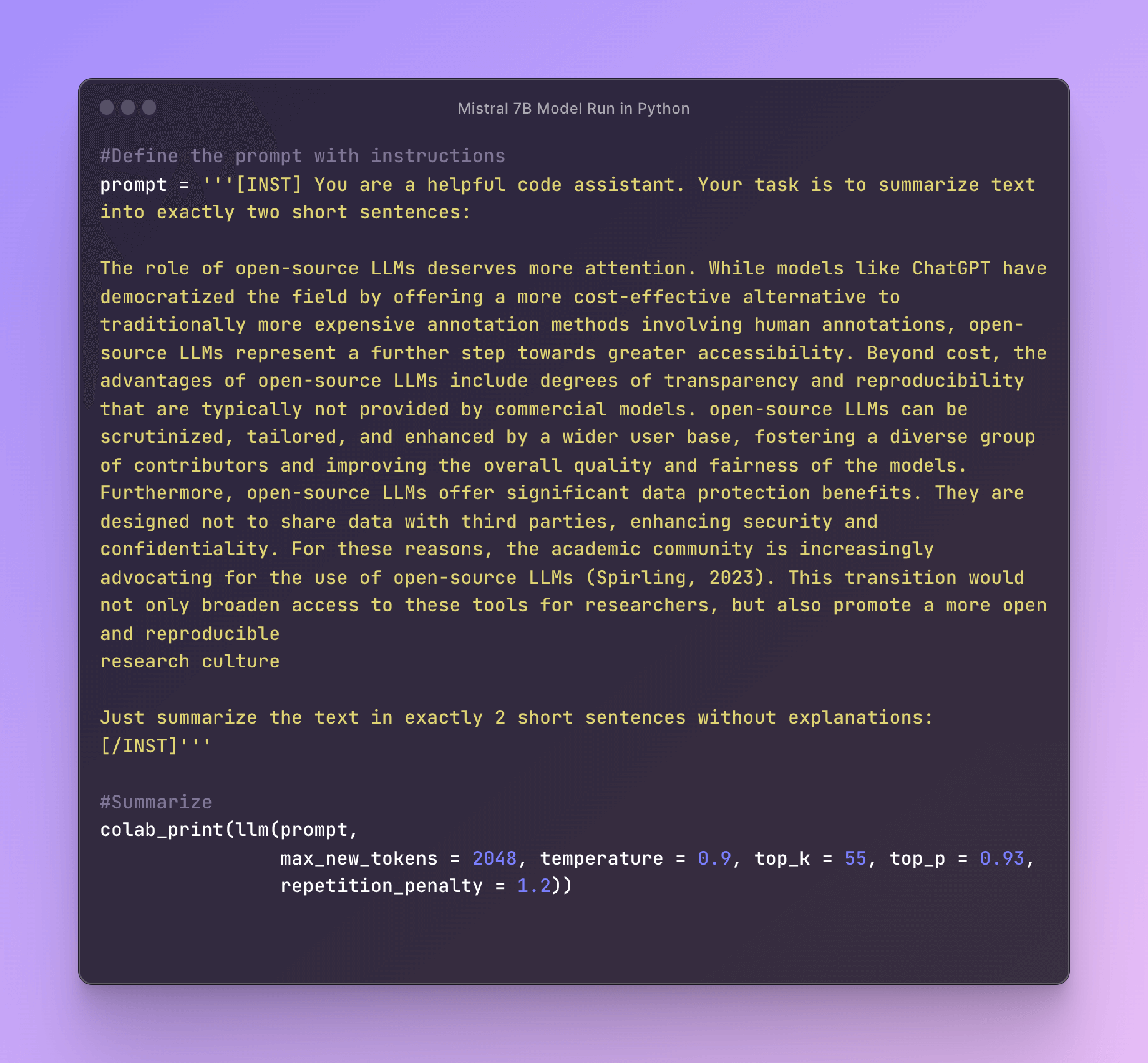

✦ Summarization: Summarize documents into shorter versions using custom instructions. Get the gist while reducing word count.

Model Response: Open-source large language models deserve more attention by democratizing AI through cost-effectiveness, transparency, and allowing customization by diverse contributors to improve quality and fairness, while also enhancing data protection. The academic community advocates for open-source LLMs to broaden access and promote a more open research culture.

✦ Custom Instructions: Wrap your instruction in [INST] and [/INST] tags so the instruction-tuned model treats it as a request to follow rather than text to continue. For example, generate a JSON file from a text description.
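As a rough illustration of the use cases above, the sketch below builds [INST]-wrapped prompts for translation and JSON generation and pulls a JSON object back out of a reply. The helper names `build_instruct_prompt` and `extract_json` are made up for this example, the exact wording of each instruction is an assumption, and the sample reply is hand-written rather than real model output.

```python
import json


def build_instruct_prompt(instruction: str, text: str = "") -> str:
    """Wrap an instruction (and optional input text) in [INST] tags."""
    body = instruction.strip()
    if text:
        body += "\n\n" + text.strip()
    return f"[INST] {body} [/INST]"


def extract_json(response: str):
    """Parse the span from the first '{' to the last '}'; None if that fails."""
    start, end = response.find("{"), response.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        return json.loads(response[start:end + 1])
    except json.JSONDecodeError:
        return None


translate = build_instruct_prompt(
    "Translate the following sentence into French:",
    "Alina is a senior data scientist who writes blog posts on her website.",
)
to_json = build_instruct_prompt(
    "Return a JSON object with the keys 'name' and 'role' for this description:",
    "Alina is a senior data scientist.",
)

# Hand-written stand-in for a model reply, used only to show the parsing step.
sample_reply = 'Here you go: {"name": "Alina", "role": "senior data scientist"}'
print(extract_json(sample_reply))
```

Models often surround JSON with extra chatter, so parsing defensively and checking for `None` is usually safer than calling `json.loads` on the raw reply.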

Additional Uses: Other tasks include:

✦ Classification

✦ Text completion

✦ Question answering

✦ Conversation modeling

Mistral-7B handles diverse natural language applications. Try it on translation, summarization, or your own custom tasks to see where it fits into your work.

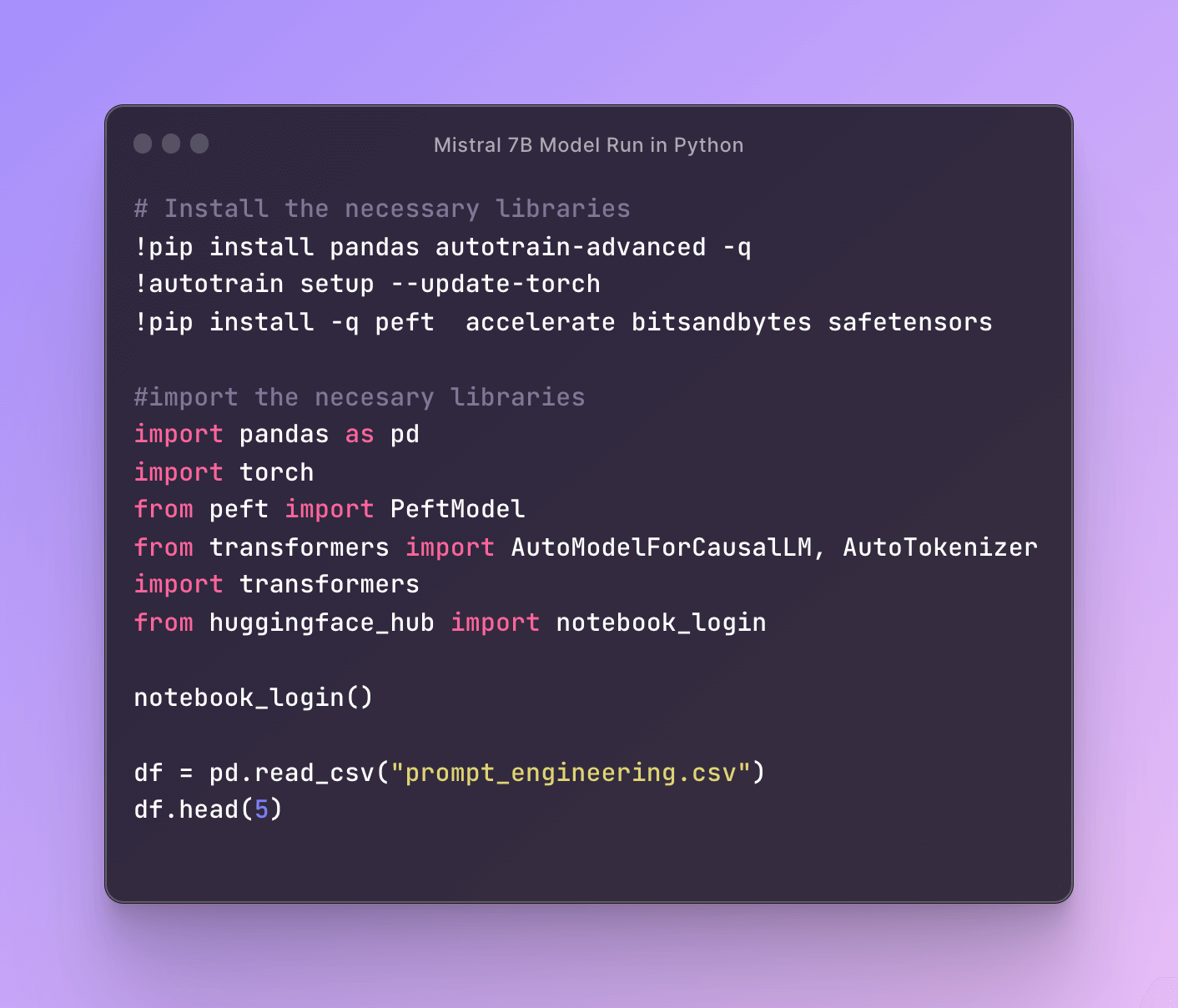

Fine-Tuning Mistral-7B

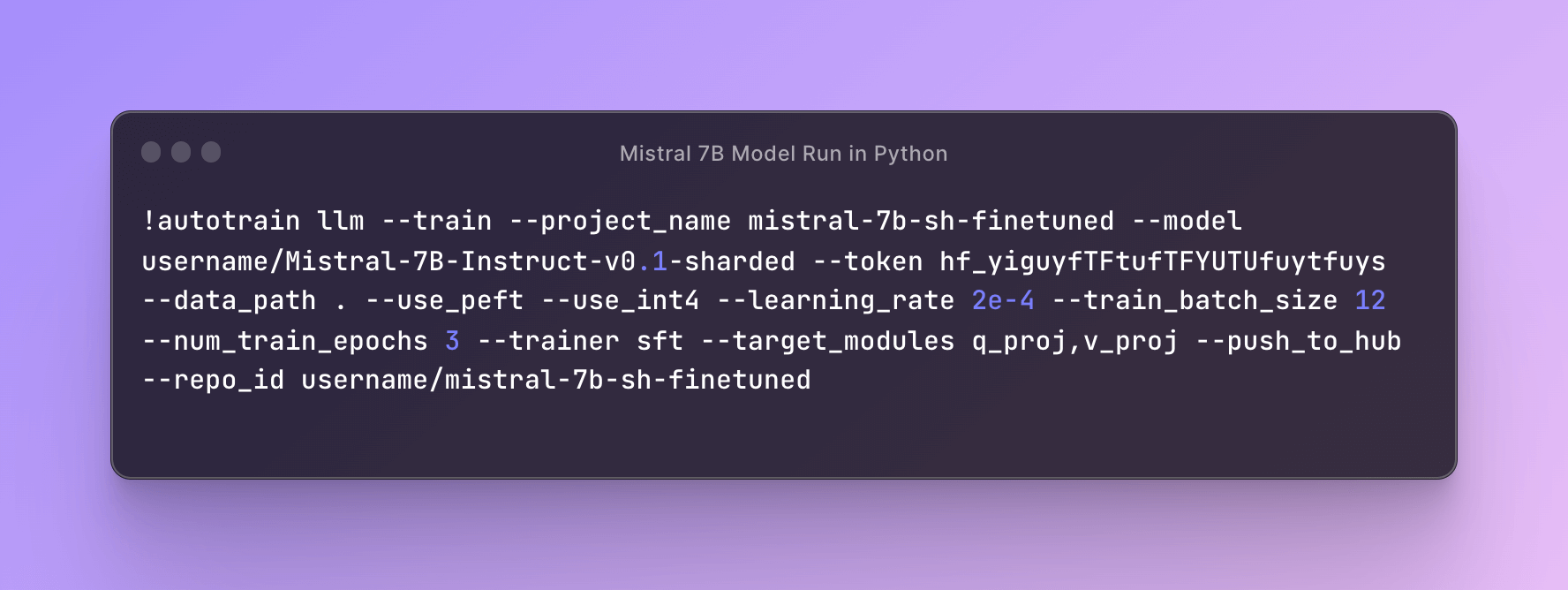

Here are the steps to fine-tune Mistral-7B for image prompt generation:

1. Set Up the Environment: Install and import libraries such as torch, transformers, and autotrain (published as the autotrain-advanced package).

2. Get a Hugging Face Token: Log in to Hugging Face from the browser and copy your access token.

3. Train the Model: Run autotrain to fine-tune Mistral-7B on your dataset for a few epochs.

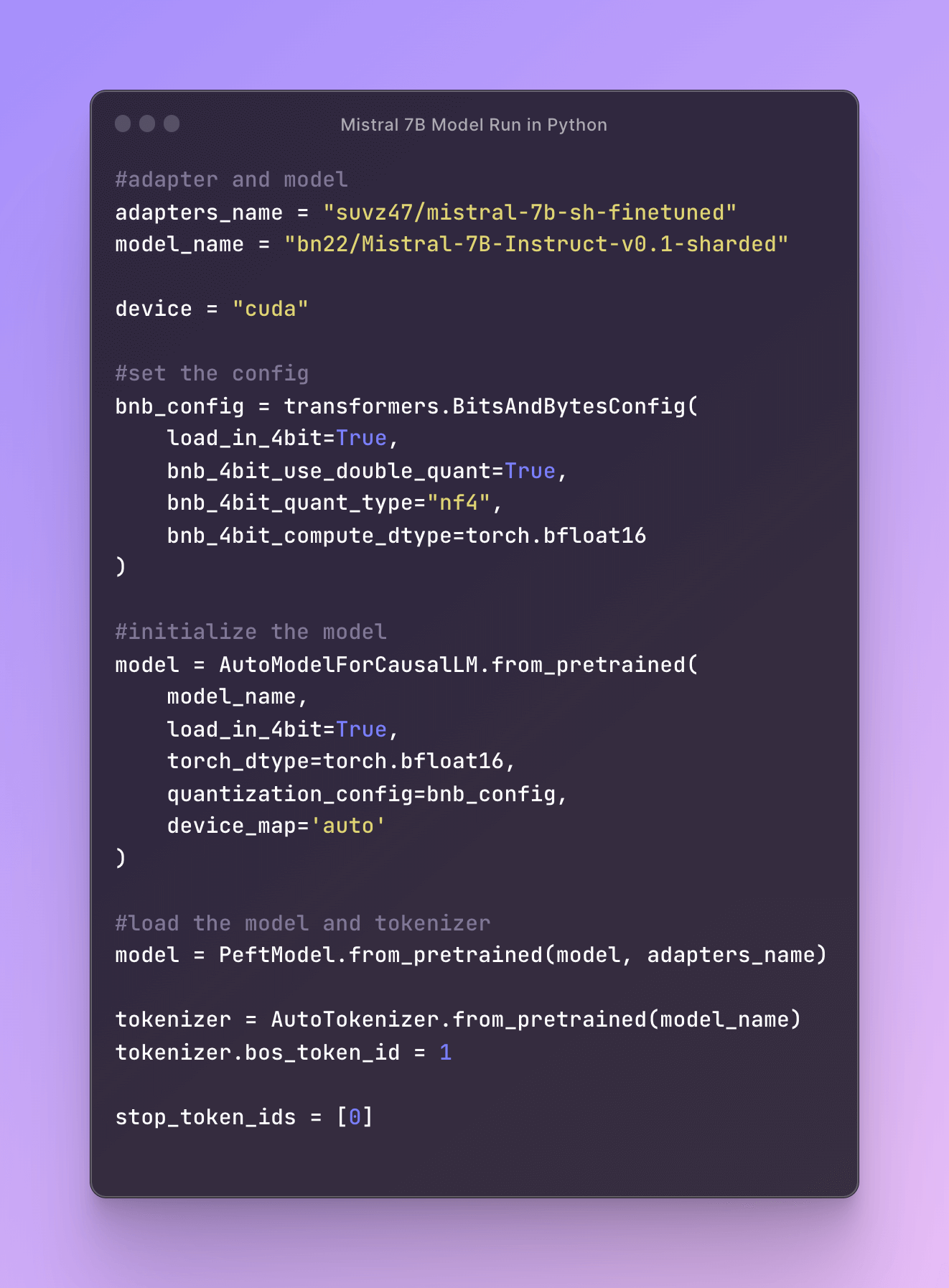

4. Load Fine-Tuned Model: Use the model and tokenizer for inference.

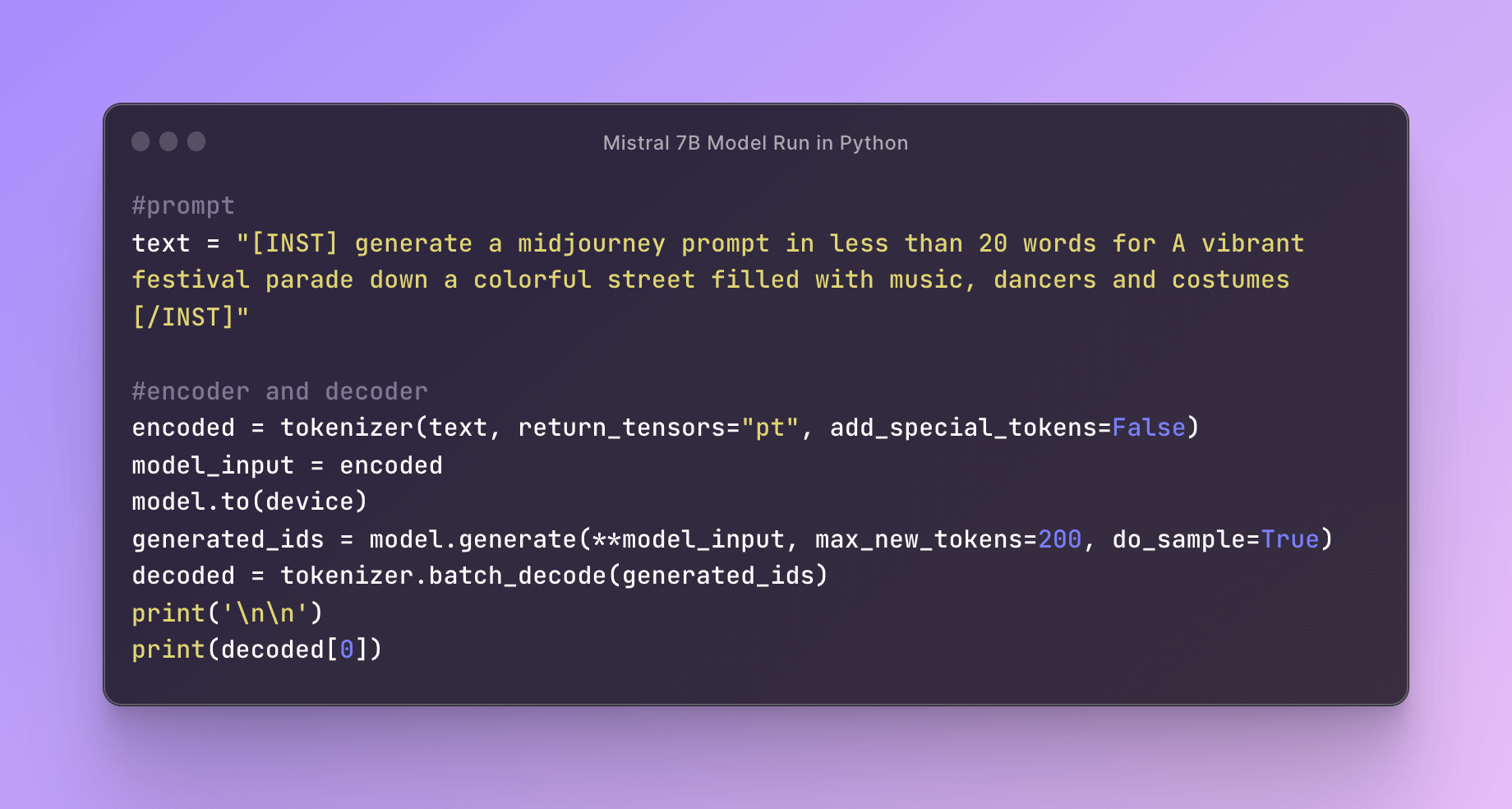

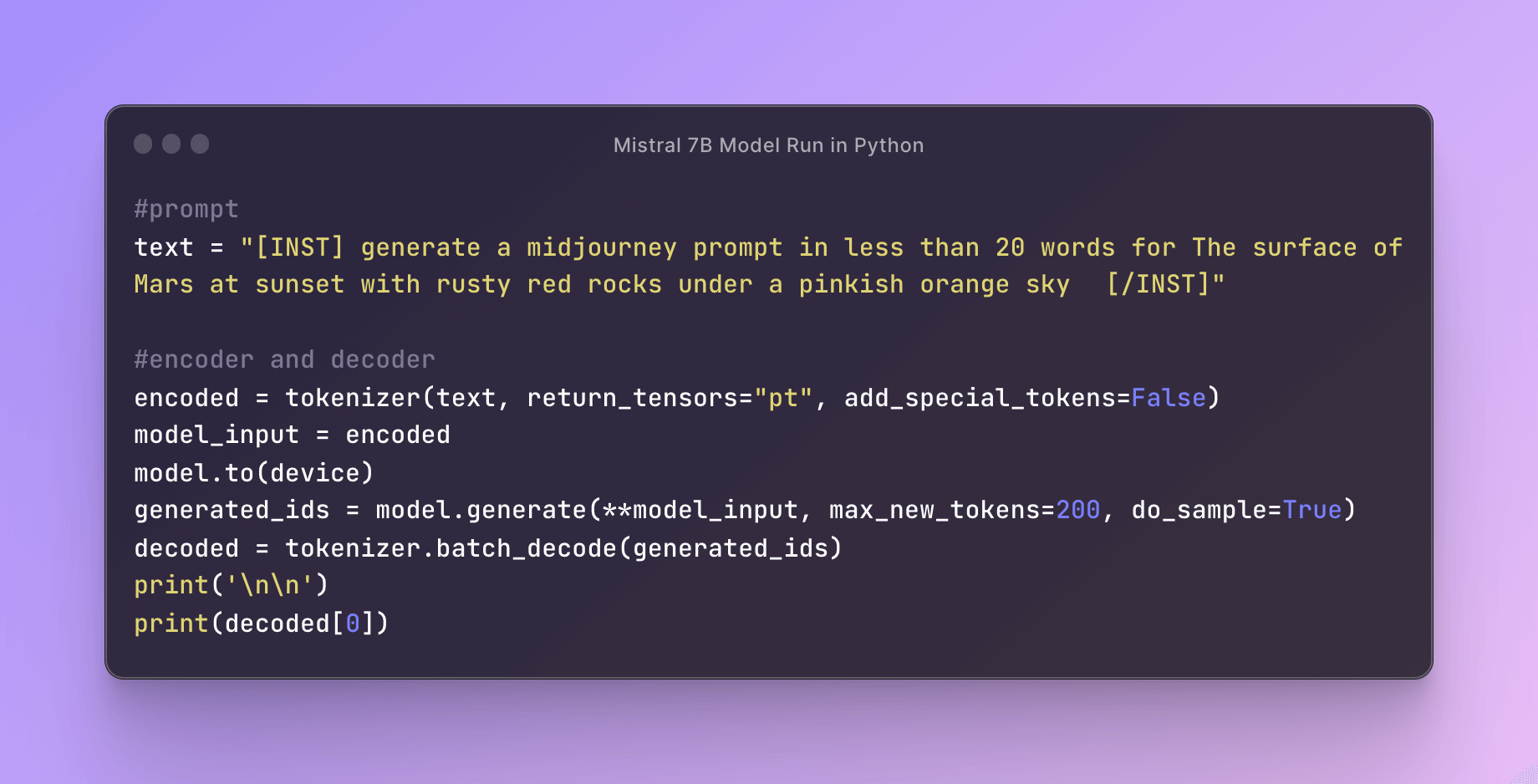

5. Generate Image Prompts: Input a few words and get detailed Midjourney-style descriptions.

Model Response: A joyous carnival cavalcade flows through lanes aglow with revelers in riotous robes dancing to drums.

Model Response: Mars alight at dusk, ochre crags silhouetted 'gainst vermilion heavens darkening to umber.
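Loading the fine-tuned model and generating an image prompt might look roughly like the sketch below, which uses the Hugging Face transformers API. The directory name `my-mistral-image-prompts`, the instruction template, and the sampling settings are placeholders rather than values from the original notebook. The template in particular should match whatever format your training data used. `device_map="auto"` needs the accelerate package, and if training saved only a PEFT adapter you would load it through the peft library instead.

```python
def build_image_prompt_request(idea: str) -> str:
    """Hypothetical template: keep it identical to the one used in training."""
    return f"[INST] Write a detailed image prompt for: {idea.strip()} [/INST]"


def generate_image_prompt(
    idea: str,
    model_dir: str = "my-mistral-image-prompts",  # placeholder output folder
    max_new_tokens: int = 80,
) -> str:
    """Load the fine-tuned model and tokenizer, then expand a short idea."""
    # Imported lazily so the template helper works without these packages.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = AutoModelForCausalLM.from_pretrained(
        model_dir,
        torch_dtype=torch.float16,  # half precision to fit a single GPU
        device_map="auto",          # requires the accelerate package
    )

    inputs = tokenizer(build_image_prompt_request(idea), return_tensors="pt")
    inputs = inputs.to(model.device)
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,
        temperature=0.7,
    )
    # Drop the echoed input tokens so only the newly generated text remains.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()


# Example usage (needs a GPU and a fine-tuned checkpoint on disk):
# print(generate_image_prompt("mars at dusk"))
```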

Key Takeaways

In summary, Mistral-7B delivers top-tier AI performance using fewer resources than larger models. Fine-tuning adapts it to specific applications by teaching it to follow customized instructions. Its efficiency and output quality make it a valuable tool for natural language tasks.

Here are some key Mistral-7B model takeaways:

✦ Mistral-7B delivers top-tier performance using fewer parameters than larger models. Its efficient architecture balances quality and resources well.

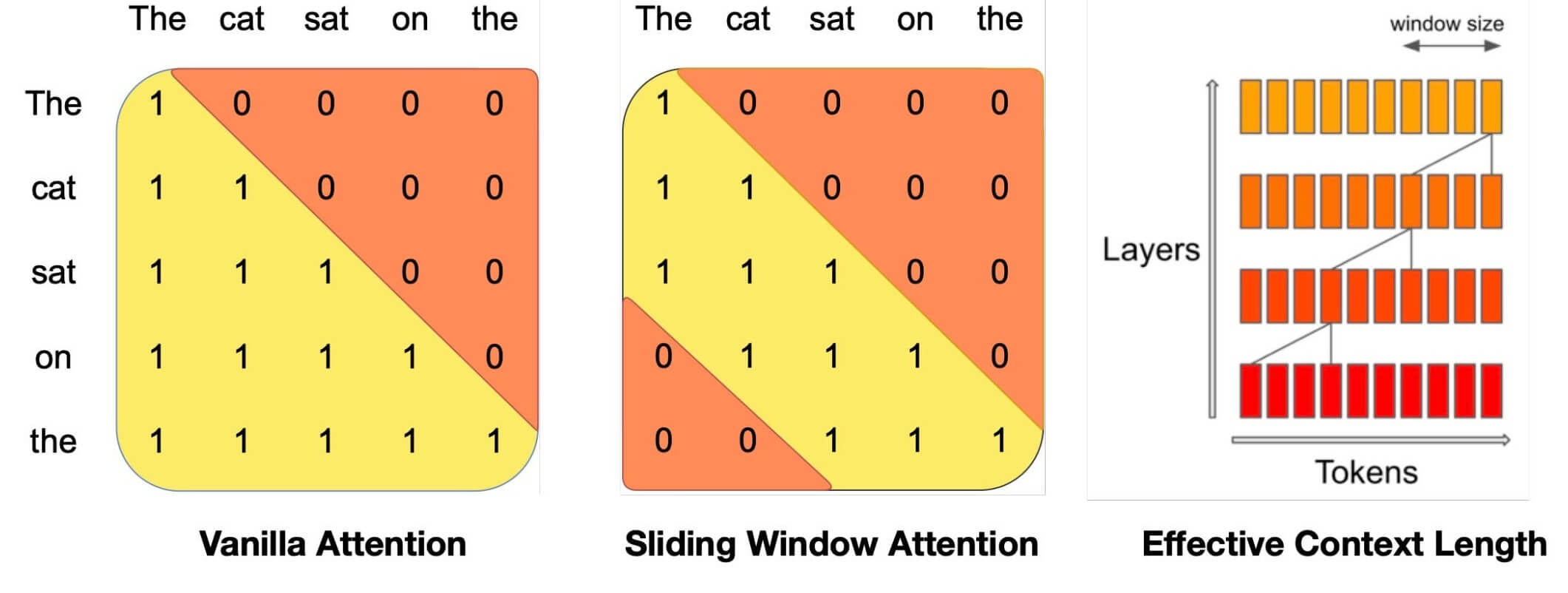

✦ Techniques like Sliding Window Attention, Flash Attention, and xFormers help it process long sequences efficiently and run faster than its size alone would suggest.

✦ The model shows versatility in tasks like translation, summarization, text generation, and more. Its abilities cover diverse natural language applications.

✦ Fine-tuning Mistral-7B allows adding custom instructions to extend its capabilities for specific needs. This was demonstrated by training it as an "image prompt engineer" to generate descriptive text from brief descriptions.

✦ With fine-tuning, the model's pre-trained knowledge and abilities can be customized for a wide range of language tasks. The image prompt example shows how to repurpose Mistral-7B for a novel task.
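To make the Sliding Window Attention takeaway more concrete, here is a toy sketch of the attention mask it implies. It uses plain Python lists and a tiny window of 3 so the pattern is easy to read (Mistral-7B's actual window is 4,096 tokens). The convention used here, where each token sees itself plus the previous `window - 1` tokens, is a simplification chosen for illustration and may differ by one position from a given implementation.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when token i may attend to token j.

    Causal: no looking ahead (j <= i).
    Windowed: only the most recent `window` positions (j > i - window).
    """
    return [
        [(i - window < j <= i) for j in range(seq_len)]
        for i in range(seq_len)
    ]


mask = sliding_window_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))

# Prints:
# x.....
# xx....
# xxx...
# .xxx..
# ..xxx.
# ...xxx
```

With full causal attention each row would keep growing with the sequence, whereas here no row ever holds more than `window` entries. That bounded per-token cost is what keeps long sequences cheap.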